
Datasets

Datasets for people tracking:

Kinect Tracking Precision (KTP) dataset

IAS-Lab People Tracking dataset

Datasets for person re-identification:

BIWI RGBD-ID dataset

IAS-Lab RGBD-ID dataset

Datasets for action recognition:

IAS-Lab Action dataset

Software

OpenPTrack

OpenPTrack is an open source project led by UCLA REMAP and Open Perception. Key collaborators include the University of Padova and Electroland. The project kicked off in the winter of 2013 to provide an open source solution for scalable, multi-imager person tracking for education, arts, and culture applications. An alpha version of the code is currently available in our GitHub repository and supports multi-imager tracking using the Microsoft Kinect 360, Kinect One, Mesa Imaging Swissranger cameras and stereo cameras. To stay updated, follow @OpenPTrack and @UCLAREMAP.

Language: C++.

Type of license: BSD.

Download here.

ROS-Industrial Human Tracker

I contributed code for people detection with RGB-D data as part of a PCL code sprint sponsored by the Southwest Research Institute (SwRI), ROS-Industrial and the National Institute of Standards and Technology (NIST).

Language: C++.

Type of license: BSD.

Download here.

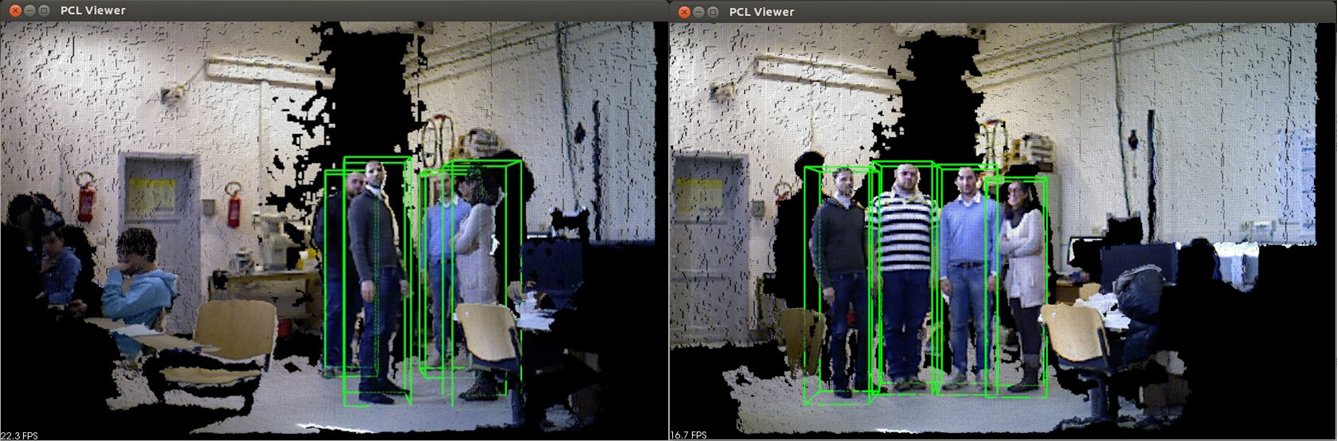

Detecting people on a ground plane with RGB-D data

I contributed code for people detection with RGB-D data as part of the people module of the Point Cloud Library.

Here is a link to a tutorial explaining how to use this code to detect people standing or walking on a ground plane in real time using only standard CPU computation.

This implementation corresponds to the people detection algorithm for RGB-D data presented in:

M. Munaro, F. Basso, and E. Menegatti. "Tracking people within groups with RGB-D data". In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Algarve (Portugal), 2012.

Language: C++.

Type of license: BSD.

Download here.

Online Adaboost for creating target-specific color classifiers

Implementation of the Online Adaboost algorithm proposed in:

H. Grabner and H. Bischof. On-line boosting and vision. In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, pages 260–267, Washington, DC, USA, 2006.

It comes with an example which learns target-specific color classifiers by using Online Adaboost and the color features described in:

F. Basso, M. Munaro, S. Michieletto and E. Menegatti. Fast and robust multi-people tracking from RGB-D data for a mobile robot. In Proceedings of the 12th Intelligent Autonomous Systems (IAS) Conference, Jeju Island (Korea), 2012.

Language: C++.

Type of license: BSD.

Download here.

Projects

Analysis of populated environments with RGB-D data

Robots operating in populated environments must be endowed with fast and robust algorithms for people detection, tracking and gesture/action recognition. Only recently have RGB-D cameras been released that provide color and depth information at good resolution and at an affordable price. This project aims to exploit such data to make robots safe and ready for real-world applications.

Related videos: video1, video2

Main references:

M. Munaro and E. Menegatti. "Fast RGB-D people tracking for service robots". Journal on Autonomous Robots, Springer, vol. 37, no. 3, pp. 227-242, ISSN: 0929-5593, doi: 10.1007/s10514-014-9385-0, 2014.

M. Munaro, F. Basso, and E. Menegatti. "Tracking people within groups with RGB-D data". In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Algarve (Portugal), pp. 2101-2107, 2012.

F. Basso, M. Munaro, S. Michieletto and E. Menegatti. "Fast and robust multi-people tracking from RGB-D data for a mobile robot". In Proceedings of the 12th Intelligent Autonomous Systems (IAS) Conference, Jeju Island (Korea), vol. 193, pp. 265-276, 2012.

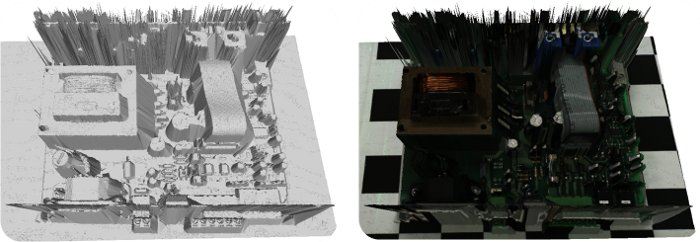

3D Complete - Efficient 3D Completeness Inspection (European Project)

This FP7 European project aims to develop efficient 3D completeness inspection methods that exploit two different technologies. The first computes arbitrary views of an object from a small number of images of that object; the second combines 3D shape data with color and texture information. Both technologies will automatically cover the full chain from data acquisition through pre-processing to the final decision-making. The project also focuses on using standard hardware to create a cost-efficient technology.

At the IAS-Lab we developed a laser-triangulation system for acquiring 2.5D textured models of the objects to be inspected.

Here you can find an example of a 2.5D model of a test case (left) together with its textured version (right).

Main references:

E. So, M. Munaro, S. Michieletto, E. Menegatti, S. Tonello. "3DComplete: Efficient Completeness Inspection using a 2.5D Color Scanner". Computers in Industry - Special Issue on 3D Imaging in Industry, Elsevier, 2013.

E. So, M. Munaro, S. Michieletto, M. Antonello, E. Menegatti. "Real-Time 3D Model Reconstruction with a Dual-Laser Triangulation System for Assembly Line Completeness Inspection". In Proceedings of the 12th Intelligent Autonomous Systems (IAS) Conference, Jeju Island (Korea), 2012.

M. Munaro, S. Michieletto, E. So, D. Alberton, E. Menegatti. "Fast 2.5D model reconstruction of assembled parts with high occlusion for completeness inspection". In Proceedings of the International Conference on Machine Vision, Image Processing, and Pattern Analysis (ICMVIPPA), Venice, Italy, 2011.

Past projects

FREEsubNET European Project

FREEsubNET was an FP6 European Project in which I took part as a Marie Curie Early Stage Researcher at IFREMER.

This project dealt with the development of computer vision and robotics algorithms for Intervention Autonomous Underwater Vehicles (I-AUVs).

In this project I worked on underwater image mosaicking and 2.5D reconstruction techniques, and I took part in two sea missions to test my own and other researchers' algorithms with IFREMER's underwater vehicles.

Below you can see three of IFREMER's vehicles: the Human Operated Vehicle (HOV) Nautile, the Remotely Operated Vehicle (ROV) Victor 6000 and the Autonomous Underwater Vehicle (AUV) AsterX.

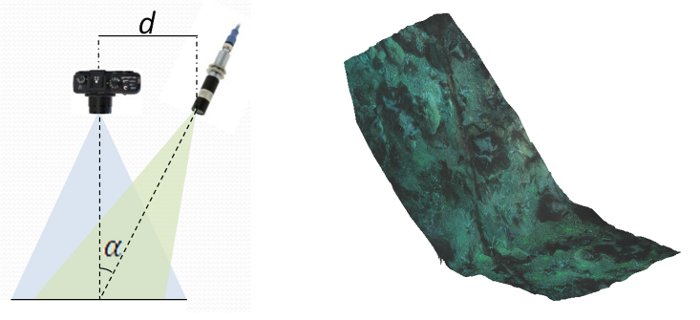

I developed an approach based on a laser-camera setup to perform accurate underwater three-dimensional reconstruction from still photos acquired by an Autonomous Underwater Vehicle (AUV). In particular, laser information extracted from the images, coupled with conventional dead reckoning navigation, was used to build three-dimensional textured models of the seabed when a feature-based approach failed for lack of distinctive image features.

The figure below illustrates the principle of laser-camera triangulation (left) and shows a reconstruction of an underwater scene (right).

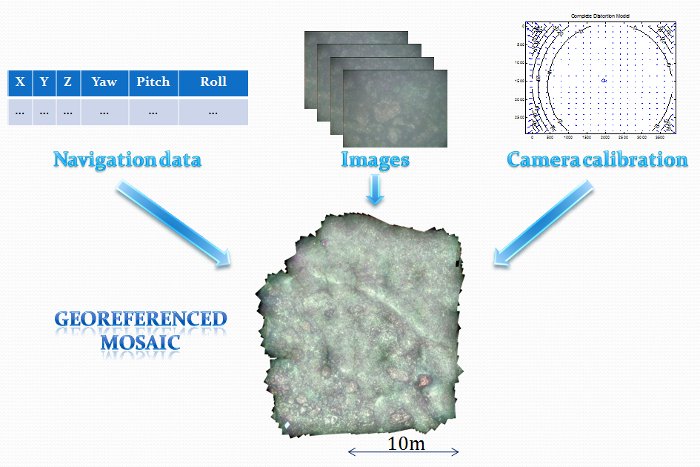
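The triangulation principle can be illustrated with a minimal sketch. It assumes an ideal pinhole camera without lens distortion or refraction, and a laser sheet modeled as a plane `n · X = d` expressed in the camera frame. The names `Pinhole`, `Plane` and `triangulateLaserPixel` are hypothetical and chosen only for this example; this is not the code used in the project.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <optional>

using Vec3 = std::array<double, 3>;

// Hypothetical pinhole intrinsics: focal lengths and principal point, in pixels.
struct Pinhole { double fx, fy, cx, cy; };

// Hypothetical laser plane in the camera frame: all points X with n . X = d.
struct Plane { Vec3 n; double d; };

double dot(const Vec3& a, const Vec3& b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Back-project pixel (u, v) to a viewing ray through the camera centre and
// intersect that ray with the laser plane. Returns the 3D point in the camera
// frame, or nothing if the ray is parallel to the plane or the intersection
// lies behind the camera.
std::optional<Vec3> triangulateLaserPixel(const Pinhole& cam, const Plane& laser,
                                          double u, double v) {
  assert(cam.fx != 0.0 && cam.fy != 0.0);
  const Vec3 ray = {(u - cam.cx) / cam.fx, (v - cam.cy) / cam.fy, 1.0};
  const double denom = dot(laser.n, ray);
  if (std::abs(denom) < 1e-12) return std::nullopt;  // ray parallel to plane
  const double t = laser.d / denom;                  // scale along the ray
  if (t <= 0.0) return std::nullopt;                 // behind the camera
  return Vec3{t * ray[0], t * ray[1], t * ray[2]};
}
```

Each image pixel where the laser line is detected yields one 3D point in this way; in an underwater setting the plane parameters and the camera model would additionally have to account for calibration in water.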

Image mosaicking is a hard task in the underwater domain. Images must be collected near the sea bottom because of light absorption in water, and the position of the vehicle cannot be estimated precisely, because it is subject to errors that increase with the dive length.

I implemented an image mosaicking algorithm that estimates the position of every picture in the final mosaic through global optimisation of image feature positions and of the navigation data estimated by the dead reckoning sensors of the vehicle.

Here you can see the conceptual scheme of the algorithm and two georeferenced mosaics composed of 500 and 300 images, respectively, collected by an Autonomous Underwater Vehicle.
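As a toy illustration of combining navigation data with image-derived constraints in one global optimisation, the sketch below works in one dimension only. It assumes each image has an absolute position from dead reckoning and that consecutive images are linked by a relative offset obtained from feature matching, and it minimises a weighted sum of squared residuals of both kinds. The function name `fusePositions`, the weights `wNav` and `wFeat`, and the 1D chain structure are assumptions made for this example, not the algorithm's actual formulation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimise  sum_i wNav * (p[i] - nav[i])^2
//         + sum_i wFeat * (p[i+1] - p[i] - offsets[i])^2
// over the image positions p. The normal equations are tridiagonal with
// off-diagonal entries -wFeat, so they are solved with the Thomas algorithm.
std::vector<double> fusePositions(const std::vector<double>& nav,
                                  const std::vector<double>& offsets,
                                  double wNav, double wFeat) {
  const std::size_t n = nav.size();
  if (n == 0) return {};
  assert(offsets.size() + 1 == n);
  assert(wNav > 0.0 && wFeat >= 0.0);

  // Build the diagonal and right-hand side of the normal equations.
  std::vector<double> diag(n, wNav), rhs(n);
  for (std::size_t i = 0; i < n; ++i) {
    rhs[i] = wNav * nav[i];
    if (i > 0)     { diag[i] += wFeat; rhs[i] += wFeat * offsets[i - 1]; }
    if (i + 1 < n) { diag[i] += wFeat; rhs[i] -= wFeat * offsets[i]; }
  }

  // Forward elimination.
  std::vector<double> c(n, 0.0), d(n, 0.0);
  c[0] = -wFeat / diag[0];
  d[0] = rhs[0] / diag[0];
  for (std::size_t i = 1; i < n; ++i) {
    const double m = diag[i] + wFeat * c[i - 1];
    c[i] = -wFeat / m;
    d[i] = (rhs[i] + wFeat * d[i - 1]) / m;
  }

  // Back substitution.
  std::vector<double> p(n);
  p[n - 1] = d[n - 1];
  for (std::size_t i = n - 1; i-- > 0;) p[i] = d[i] - c[i] * p[i + 1];
  return p;
}
```

A larger `wFeat` makes the solution follow the image-based offsets more closely, while a larger `wNav` keeps it closer to the drifting navigation estimates. A real mosaic would use 2D poses and constraints between non-consecutive overlapping images as well.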

References:

L. Brignone, M. Munaro, AG. Allais, J. Opderbecke. "First sea trials of a laser aided three dimensional underwater image mosaicing technique". IEEE Oceans Conference, Santander, Spain, 2011.

People detection for video surveillance applications

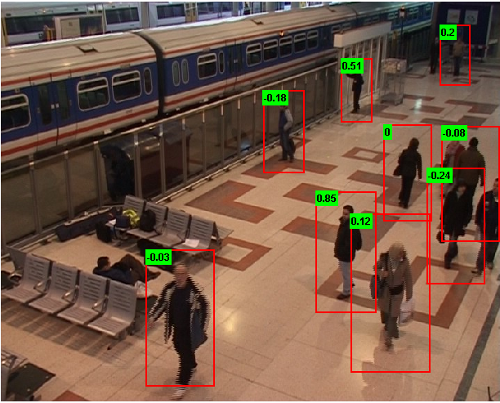

For my master's thesis I worked on people detection algorithms for video surveillance applications.

I implemented and tested a technique based on the Histogram of Oriented Gradients (HOG) descriptor and a Support Vector Machine (SVM) classifier.

I evaluated the use of multiple classifiers for different views of people, and I showed how to obtain site-specific information that can be important for the learning phase and can yield very good results and speed increases in the detection phase.

Here the detection result for a frame of the PETS 2006 dataset is reported.
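To make the two building blocks concrete, the following simplified sketch computes the orientation histogram of a single HOG cell and evaluates a linear SVM decision function. It assumes a row-major grayscale image, central-difference gradients, unsigned orientations with hard binning into 9 bins, and no block normalization, so it is deliberately less complete than a full HOG pipeline. The names `cellHistogram`, `svmScore` and `kBins` are hypothetical, and the code is not the thesis implementation.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

constexpr int kBins = 9;  // assumed number of orientation bins over 0..180 degrees

// Magnitude-weighted histogram of unsigned gradient orientations for the
// cellSize x cellSize cell whose top-left corner is (x0, y0).
// Pixels on the image border are skipped because they lack a neighbour.
std::array<double, kBins> cellHistogram(const std::vector<double>& img,
                                        int width, int height,
                                        int x0, int y0, int cellSize) {
  assert(img.size() == static_cast<std::size_t>(width) * height);
  std::array<double, kBins> hist{};
  const double pi = std::acos(-1.0);
  for (int y = y0; y < y0 + cellSize; ++y) {
    for (int x = x0; x < x0 + cellSize; ++x) {
      if (x <= 0 || y <= 0 || x >= width - 1 || y >= height - 1) continue;
      const double gx = img[y * width + x + 1] - img[y * width + x - 1];
      const double gy = img[(y + 1) * width + x] - img[(y - 1) * width + x];
      double angle = std::atan2(gy, gx);  // in [-pi, pi]
      if (angle < 0.0) angle += pi;       // fold opposite directions together
      if (angle >= pi) angle -= pi;       // keep the angle in [0, pi)
      int bin = static_cast<int>(angle / pi * kBins);
      if (bin >= kBins) bin = kBins - 1;
      hist[bin] += std::hypot(gx, gy);    // vote weighted by gradient magnitude
    }
  }
  return hist;
}

// Linear SVM decision value w . x + b; a window is accepted as a person
// when the score exceeds a chosen threshold (commonly zero).
double svmScore(const std::vector<double>& w, double b,
                const std::vector<double>& x) {
  assert(w.size() == x.size());
  double s = b;
  for (std::size_t i = 0; i < w.size(); ++i) s += w[i] * x[i];
  return s;
}
```

In a complete detector, the histograms of all cells in a detection window would be normalized over blocks, concatenated into one feature vector and passed to the trained classifier.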

References:

M. Munaro, A. Cenedese. "Scene specific people detection by simple human interaction". Workshop on Human Interaction in Computer Vision (HICV), in 13th IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 2011.